In mid-September 2019, Facebook announced its first steps toward setting up an oversight board: a body to which Facebook's users can appeal the company's content decisions. A quick summary:

- This is practically a pre-announcement; most of the detailed decisions have not yet been made

- The release of the charter comes a day before Facebook will join its peers from Google and Twitter at a hearing on Capitol Hill to probe how social media contributes to real-world violence. (source)

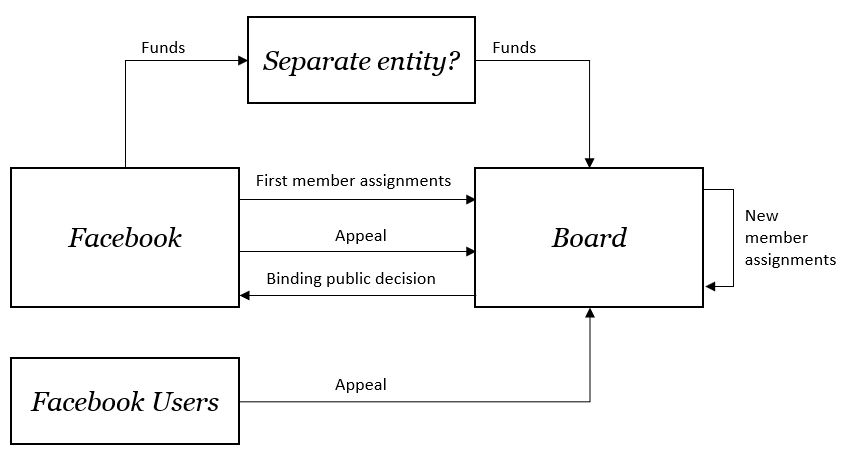

- The Board will be independent of Facebook

- The Board will be funded by Facebook, but potentially through a separate entity.

- The first members of the Board will be appointed by Facebook; later members (40 altogether) will be appointed by existing members

- The list of members will be public, and their compensation and term (3 years) will be fixed

- The Board will only make decisions about Facebook’s contested content moderation decisions

- Both users and Facebook itself can refer cases to the Board

- The Board will make anonymous decisions in rotating panels made up of an odd number of its members

- The Board's decisions will be binding on Facebook

- While Facebook promises that details will be defined following consultation with experts, global workshops, and public proposals, no details or channels for that input have been defined yet

Here’s how I understand the setup:

To say the least, it is strange that this announcement from a born-on-the-web company, about giving Facebook's users more control, comes in the form of two PDFs (a charter and an infographic) and a blog post that does not allow comments.

It is also strange that, to police itself, Facebook is setting up an independent body rather than internal processes and safeguards. In Facebook's case, though, this might be the right choice, given the amount of controversy it has attracted and the inaction it has shown. The minute Apple announces an iPhone, you can preorder one; likewise, Facebook should at least have specified channels for experts to volunteer their input, and ideally channels to hear from its users as well.

And finally, it is strange that the company that gave a voice to the masses is building an oversight mechanism borrowed from the checks and balances of states, rather than figuring out how to draw on its own community.

Nonetheless, I am happy to see a huge organization take its first steps toward balancing out its power over its users.